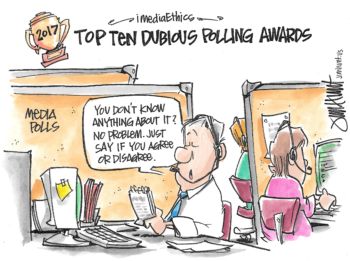

(Credit: Jim Hunt for iMediaEthics)

Editor’s note: Every January, iMediaEthics’ polling director David W. Moore assembles the top ten “Dubious Polling Awards” for iMediaEthics. The tongue-in-cheek awards “honor” the previous year’s most questionable actions in media polling news. The 2018 awards are the 10th in this series.

10. Fake Choice Award

(Credit: bawar slimane/Wikipedia)

Winner: The Marist poll, for its question on gun control that asked: “Do you think it is more important to protect gun rights or control violence?”

Really? Is that what the people at Marist think is the issue facing this country – gun rights and uncontrolled violence, versus controlled violence and no gun rights?

James Madison, who led the fight for Congress to pass the Bill of Rights, which of course includes the Second Amendment, believed that “Government is instituted and ought to be exercised for the benefit of the people; which consists in the enjoyment of life and liberty, with the right of acquiring and using property, and generally of pursuing and obtaining happiness and safety.”

It seems unlikely that Madison believed protecting gun rights would preclude the pursuit of happiness and safety, and instead lead to uncontrolled violence.

It’s a fake trade-off.

9. “Not a Particularly Good Try, and Definitely No Cigar” Award

Fox News in 2012 (Credit: Wikipedia/Steve Bott)

Winner: Fox News, for its less-than-sterling effort to design a better mousetrap – in this case, a better exit poll operation.

It’s always heartening when media organizations try to be innovative, and if they make a good effort, even if it doesn’t quite succeed, we all like to hand out faint praise: Good try, but…no cigar!

Lamentably, not in this case.

For last November’s election, Fox News decided to launch its own exit poll operation, abandoning the media consortium it had worked with for two decades. The reason, Fox said, was that the consortium was slow in projecting election night winners.

How did that go, you ask? Sad to report: Not well.

Of the five statewide contests, Fox projected winners in only two, and trailed its cable competitors in both. And, unlike MSNBC and CNN, Fox had no voter data to share with viewers.

Definitely, no cigar.

8. The All-In, and I Mean All-In Award

(Credit: http://nyphotographic.com/)

Winner: CNN, and to a slightly lesser extent, most media polls, for their uncanny ability to find a public in which virtually all citizens are fully engaged and informed enough to express an opinion on almost any issue.

Some people might think that given the low level of knowledge of many Americans, polls should show significant segments of the population without opinions on many subjects. Indeed, as a general rule, if polls are designed to measure non-opinion, results indicate that from a third to a half of the public falls into that category.

But as demonstrated in many posts on this site (here, here, here, here, and here), pollsters and the media in general don’t like that vision of the public. Instead, they like to portray the public as highly informed and engaged, more in line with what theorists of democracy would have us believe.

Fake visions are so much more appealing than reality.

CNN appears to be the leader in this regard. In a post this year, for example, I noted the incredibly high percentage of Americans who supposedly had a meaningful opinion about the Muslim ban proposed by President Trump. But CNN topped them all, with 100% of all Americans fully engaged and expressing a meaningful opinion on the issue. Other polls showed “only” 89% to 98% engaged.

Kudos to CNN. If you’re going to spin a fantasy world, it’s best to be All-In.

7. The Glass House Award

(Credit: Secretlondon123 via Flickr)

Winner: Natalie Jackson, former polling director of HuffPollster, and now with Just Capital, for her insightful critique of a Public Policy Polling (PPP) poll – a critique whose criticisms applied to her own polling as well.

One reason most pollsters don’t criticize their colleagues is that almost all the major media polls these days rely, to some extent, on questionable methods. As we are all aware, living in a metaphorical glass house is not the place to be throwing stones.

Yet, Jackson was undeterred. She made some very creditable arguments against a PPP poll that asked about a fake event and – lo! and behold! – found much of the public buying into the fiction.

Jackson decried the results because the poll fed information to the respondents, then put them on the spot by asking for an immediate opinion. She also faulted the poll for not even asking how many people knew about the supposed event.

And yet, it turns out that her criticisms of PPP also characterize much of her own polling at HuffPollster, which among other things often didn’t ask how many people knew about the issue being questioned, and would also feed information to respondents and put them on the spot by asking for an immediate opinion.

Oops!

6. “See if you can figure out what I’m asking you about” Award

(Credit: RichardLey via Pixabay)

Winner: ABC News/Washington Post poll, for reporting on public attitudes about the Paris Accords on climate change, without ever asking respondents about the Paris Accords.

No, that’s not a typo.

The poll asked whether respondents supported or opposed Donald Trump’s decision to withdraw from the “main international agreement that tries to address climate change.” The pollster just assumed people knew the reference was to the Paris Accords, and reported the results as though they were about the Paris Accords.

That’s quite a leap of faith.

According to the Pew Research Center’s latest knowledge quiz of the American people, administered last July, more than half the public was unaware that Neil Gorsuch was the new Supreme Court Justice, Robert Mueller was leading the investigation into possible Russian interference in the 2016 election, and Rex Tillerson was Secretary of State.

About four in ten Americans didn’t know that the United Kingdom is the country leaving the European Union and that Paul Ryan is Speaker of the House.

Elsewhere Pew has shown that widespread lack of public engagement in politics is quite typical.

So, is it possible that the Paris Accords issue was a shining exception, that ABC/WP’s assumption of widespread public knowledge about the Paris Accords is actually justified?

In the words of Jerry Seinfeld: “Not bloody likely!”

5. Schrödinger’s Cat Award

(Credit: Wikipedia)

Winner: The Gallup Poll, for finding public opinion in two simultaneous and contradictory states. According to its October poll, the “great majority of Americans” are in favor of more stringent gun regulations, and the public is evenly divided.

This state of quantum superposition (the analogy is a bit of a stretch, but let’s go with it) was illustrated by Austrian physicist Erwin Schrödinger’s thought experiment of a cat in a locked box, simultaneously alive and dead – its final state determined only by its interaction with the external world.

In this case, the cat, of course, is public opinion, and the external world is the news media – actually two external worlds: the so-called mainstream media, and the conservative media. Which state of public opinion prevails depends on which external world it interacts with.

Think about it!

4. “Maybe Statistics Is Not My Best Suit” Award

(Credit: Flickr/Democracy Chronicles)

Winner: UCLA Professor John Villasenor, for his debut polling report on the Brookings website that wrongly applied the Margin of Error (MOE) to his non-probability sample of students.

Okay. This is an arcane bit of professional concern, and may not be of life-or-death consequence to most readers. But the MOE is widely referred to in the media’s coverage of public opinion polls, and at the very least should be applied correctly by anyone claiming to have conducted a valid poll.

In his defense, Villasenor admitted to iMediaEthics that as a Ph.D. in electrical engineering, he had no prior survey research experience and had never before overseen the conduct of a poll.

No polling experience. No knowledge. Apparently: No problem.

(See also, the Number One “Dubious Polling” Award – Ignorance Is No Impediment.)

3. Muddled Is My Middle Name Award

Mark Penn (Credit: MarkPenn.com)

Winner: Mark Penn, a long-time pollster and political strategist, who is probably best known for his work with the Clintons, for his less-than-coherent diatribe against the national polls.

Penn went after the current national polls for showing Trump with low favorability ratings, by first claiming that they couldn’t be trusted because they were wrong in the presidential election. In fact, the national polls were not “wrong,” as they correctly predicted that Clinton would win the popular vote. It was several state polls that failed to show Trump winning their states.

Then Penn reversed course, by referring to current national polls to show that Trump’s base was sticking with the president – which means, of course, he thinks those polls are accurate after all.

And then he added a large non sequitur, that Trump’s current ratings couldn’t be as bad as the polls say, because the Republicans won majority control of the House and Senate in the November election. Say what?!

The essay is a bit confusing, to say the least. Muddled, to be precise.

2. “I hope you understand what I’m asking, but if not, it doesn’t matter – just answer the question!” Award

A North Korean border gate (Credit: Wikipedia/Roman Harak)

Winner: Quinnipiac, for its poll finding that almost half of Republicans nationally support a preemptive strike by the United States against North Korea.

The problem appears to be that many Republicans simply didn’t understand the word “preemptive.” An ABC News/Washington Post poll a few days earlier didn’t use that word, but asked if respondents favored striking North Korea first, before it attacks America or its allies. With that clearly stated scenario, even Republicans were opposed by a two-to-one margin.

A cardinal rule of polling is to design questions with common words, so that everyone understands what the questions mean. It turns out, according to WordCount, that among the 86,800 most frequently used words in English, “preemptive” ranks 82,523 – between methven and vishwa.

Need I say more?

1. Ignorance Is No Impediment Award

(Credit: Jim Hunt for iMediaEthics)

Winner: CBS News Poll, for asking respondents, who had just indicated they did not have a “good understanding” of what the GOP tax plan would do, what the tax plan would do.

Yes, that statement is correct.

Having found that more than half the respondents (57%) did not know much about the GOP tax plan, CBS persisted in asking all respondents, including this unaware majority, at least ten questions about what effect the plan would have on investors, businesses, the economy, various groups of Americans, and the respondents themselves.

And despite many respondents’ admitted lack of knowledge, by using a “forced-choice” question format, CBS was able to cajole an average of 92% of all respondents into providing a guess for each item.

Which CBS then reported as “public opinion.”

Think about that….

Of course, CBS’s poll is not alone in manufacturing the illusion of public opinion. In fact, it’s a tribute to CBS polling that it even asked whether people knew about the plan. Most pollsters gloss over that aspect of measuring opinion, thus concealing the underlying lack of public engagement on most issues.

But CBS wins this award for explicitly highlighting the knowledge-free basis of much public opinion polling.