Pew and Gallup ranked #1 in David W. Moore's annual Dubious Polling Awards. (Cartoon for iMediaEthics by Jim Hunt)

Editor’s note: Every January, iMediaEthics’ polling director David W. Moore assembles the top ten “Dubious Polling Awards” for iMediaEthics. The tongue-in-cheek awards “honor” the previous year’s most questionable actions in media polling news. The 2017 awards are the 9th in this series.

Moore, a two-time EPPY Award winner for his commentary on iMediaEthics, is also a Senior Fellow with the Carsey School of Public Policy at the University of New Hampshire and a former Gallup vice president and senior editor of the Gallup poll. He analyzes and fact-checks polls, always calling for the media to abide by best polling practices. His work can be found in our Polling Center. Check out the previous year’s Dubious Polling Awards. And the winners are:

10. Counting Chickens Before There’s Even A Rooster Award

Winner: Public Policy Polling for its poll on the 2020 presidential election 1,428 days before Election Day.

Natalie Jackson of Huffington Post was, understandably, more than a bit rankled that the poll was conducted and actually reported to the public: “Polling on 2020 is meaningless. Don’t look at it. Pollsters, don’t do it. Journalists, don’t report on it….It’s not really surprising that PPP would release this poll. After all, it traffics in attention-grabbing but dubious polls.”

Enough said.

9. The Backasswards Award

Winner: Rob Santos, the 2013-2014 President of the American Association for Public Opinion Research (AAPOR), for suggesting that even if pre-election polls are not accurate, we can always rely on public policy polls.

Not true, Rob! It’s the other way around.

When George Gallup first began conducting pre-election polls in the 1930s, his goal was to demonstrate that polls – consisting of relatively small samples – could be used to measure public opinion of the whole population. To make that point, he compared his election polls with the actual vote – for a reality check.

But for public policy polls, there is no reality check, no standard against which to compare the opinion polls to be sure they are accurate.

So, if election polls aren’t accurate, how can we have any confidence that these same polls can accurately measure opinion on public policy issues? We can’t.

Yet, Santos wrote, “Inaccurate election forecasts may well increase in the near future and not abate for some time. But we will still be able to use polling to identify and understand public sentiment on important policy issues.”

No. No. No. That’s backasswards!

8. Inciting Holiday Hostility Award

(Credit: Flickr/melissa brawner)

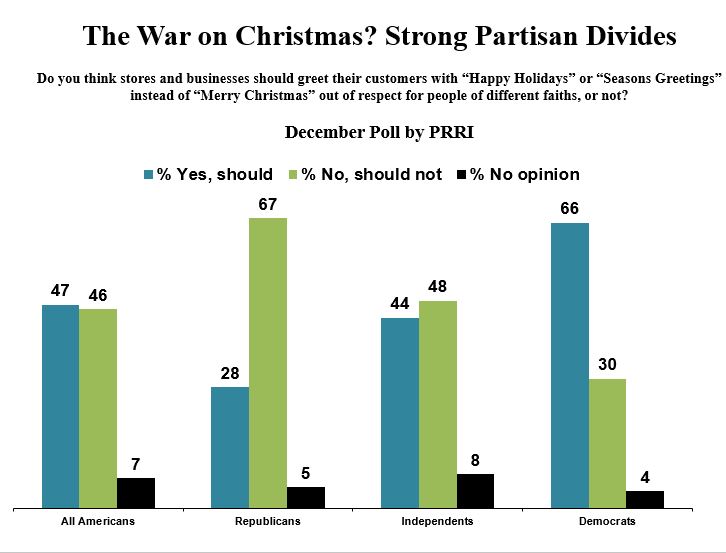

Winner: The Public Religion Research Institute for its provocative poll emphasizing “polar opposites” of Republicans and Democrats over whether to say “Merry Christmas” or “Happy Holidays.”

What better way to greet the Christmas/Holiday season than by creating the illusion that America is “polarized” over the “war on Christmas”! Asking people whether businesses should greet customers with “Happy Holidays” OR “Merry Christmas” is just the ticket to that illusion.

But had the question added the tag, “or do you think either greeting is appropriate?”, it would no doubt have shown large numbers of people not caring one way or the other.

Apparently, that wouldn’t have been a good news story. Christmas/Holiday Hostility – now that’s a story worth reporting.

7. Reading Tea Leaves Award

Winner: Associated Press and NORC for their biased poll on the arcane presidential nomination process.

How much do you think Americans really understand about the differences between caucuses and primaries, between open and closed nomination contests, and what superdelegates are and do?

Right. Not much.

So, it’s interesting when pollsters ask questions that give the illusion of an informed and opinionated public.

AP and NORC purportedly measured how dissatisfied people were with the current presidential nomination system and what they wanted instead. But every question they asked in one format could have been re-asked in a different format and produced the opposite result.

When people really don’t know much about a subject, it’s the pollsters who can shape “opinion” based on the way they frame the issue.

In such cases, we’d have just as good an idea about public opinion if we simply read tea leaves.

6. The Red Herring Award

Winner: James Hohmann of the Washington Post for his claim that Congress doesn’t pass gun control legislation because of an “intensity gap” among Americans that favors opponents of gun restrictions.

This argument very conveniently excuses members of Congress who refuse to consider even tame gun control measures to reduce gun violence.

Instead, Hohmann blames “average voters” for not being more passionate and politically organized.

The fact is that on several gun control measures, intense support is much greater than intense opposition. Blaming “average voters” for Congress’s lack of action on any of those measures is clearly a distraction from the real reasons why Congress refuses to address gun violence.

5. Dueling Polls Award

(Credit: illustration based on antique print called The Royal Duel)

Winner: Reuters/Ipsos and Pew, for their contradictory poll findings on whether Apple should help the FBI solve a murder case.

Reuters/Ipsos said Americans supported Apple’s refusal by an 11-point margin, while Pew found Americans supporting the FBI’s demand for Apple’s help by a 13-point margin.

It’s as though Apple hired one pollster and the FBI hired the other. And then the pollsters dueled it out in front of the public.

In real duels, of course, there is a winner and loser. But in polling, no one loses, and we can just pick the side that pleases us most.

That’s much more humane, of course. Besides, who takes these things seriously, anyway?

4. Gilding the Lily Award

(Credit: Pixabay)

Winner: CBS News/New York Times, CNN/ORC, Bloomberg, and Quinnipiac for their polls showing widespread support for the nomination of Merrick Garland to the Supreme Court.

When Garland was nominated by President Obama to be a Supreme Court Justice, the GOP-controlled Senate refused to hold hearings, saying that only when a new president was elected would hearings be held.

All of the polls noted above, conducted in March shortly after the announcement, showed majority public support for Garland.

An iMediaEthics poll on the same issue, however, revealed a more realistic picture of public opinion: Just 24% of Americans wanted Merrick Garland confirmed, 18% did not, and a significant majority – 57% – were so unengaged in the issue they didn’t care one way or the other.

Gilding the lily about American democracy isn’t all bad. It’s comforting to think the American public is fully engaged and informed about public policy issues, despite evidence to the contrary. Illusions make life seem better.

3. Defender of the Faith Award

Winner: Nate Cohn of the New York Times, for stalwartly defending the Brexit polls, claiming they should not be blamed for the “surprise” of the Brexit vote.

He admits the polls trended toward Remain, and that polls showing what the public expected also favored Remain, while the outcome was actually for Leave.

But Cohn doesn’t want to blame the polls for the surprise results. He points to the final HuffPollster average showing Remain with only a half point lead, and notes there was “a distinct possibility that Brexit would win.”

Except: As Huffington Post’s Natalie Jackson wrote the day after the vote, “Polls had indicated the vote would be very close, but most last-minute surveys showed ‘remain’ leading.”

Moreover, she reminded readers that the last two polls – by YouGov and Ipsos – had Remain up by four points and six points respectively. The final vote count showed Brexit winning by four points, a swing of eight and ten points from what these polls showed.
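For readers who want to check the swing figures, here is a minimal sketch of the arithmetic in Python. The function name `swing` and the convention of adding a poll's Remain lead to the actual Leave lead are illustrative assumptions, not anything the pollsters published.

```python
def swing(poll_remain_lead: float, actual_leave_lead: float) -> float:
    """Points separating a poll's Remain lead from the actual Leave lead.

    A poll showing Remain +4 and a result of Leave +4 are 8 points apart.
    """
    return poll_remain_lead + actual_leave_lead


# Figures quoted above: YouGov had Remain +4, Ipsos had Remain +6,
# and the final count showed Leave ahead by about four points.
yougov_swing = swing(4, 4)
ipsos_swing = swing(6, 4)
print(yougov_swing, ipsos_swing)
```

That gives the eight-point and ten-point gaps cited above.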

Still, Cohn writes, “In a sense, the E.U. referendum joins a pretty long list of election forecasting errors. But this one was a bit different: It was not a cataclysmic polling failure.”

O.K. Not cataclysmic. But how low do we want to set the bar?

Nate Silver in 2013 (Credit: Wikipedia/Jack Newton)

2. Elephant in the Room Award

Winner: Nate Silver of Fivethirtyeight.com, for saying nothing about his predictions of a Clinton victory in five states where she lost.

Let’s be clear. Nate Silver is one of the most creative poll analysts and statisticians publicly commenting about polls these days. His site’s predictions of a Clinton victory were always tempered with restraint, emphasizing that there was a decent chance she could lose.

Yet, in a recent posting, he claims that the loss of trust in polls in the aftermath of the election “mostly isn’t the pollsters’ fault. It’s the media’s fault.”

He points to his site’s repeated caution that Clinton was in a worse position than Obama was at the same point in his campaign, and that Clinton had – at the last calculation before the vote – a 29% chance of losing the election.

What he doesn’t mention is that, according to his own model, Clinton had a less than two-tenths of one percent chance of losing all five states: Florida, North Carolina, Pennsylvania, Michigan and Wisconsin. Or, put differently, she had a 99.85% chance of winning at least one of those states – but she lost all five.
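The two figures describe the same probability from opposite sides. A minimal sketch in Python, using only the 99.85% figure quoted above (the function name is an illustrative assumption, and the model's state-by-state probabilities are not reproduced here):

```python
def chance_of_losing_all(p_win_at_least_one: float) -> float:
    """Complement rule: P(lose every state) = 1 - P(win at least one)."""
    return 1.0 - p_win_at_least_one


# 99.85% chance of winning at least one of the five states, as quoted above.
p_lose_all_five = chance_of_losing_all(0.9985)

# Two-tenths of one percent is 0.002; the result should fall below it.
print(f"{p_lose_all_five:.2%}", p_lose_all_five < 0.002)
```

So a 99.85% chance of carrying at least one state leaves roughly a 0.15% chance of losing all five, which is indeed under two-tenths of one percent.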

This highly improbable result, according to the fivethirtyeight model, suggests that maybe the loss of trust in the polls, and in the prediction models that used the polls, wasn’t as much the media’s fault as Silver claims.

1. Fear of Failing Award

Winners: Michael Dimock, President of Pew Research Center, and James Clifton, President and CEO of The Gallup Organization, for their unwillingness to have their institution’s pre-election polls tested against the vote count.

OK…we know that polling has gotten more difficult. As Cliff Zukin, former president of the American Association for Public Opinion Research, warned last year in a New York Times op ed, “What’s the Matter With Polling?”, pre-election polling “is in near crisis.”

He noted that extremely low response rates, combined with new technology, have made it difficult and very costly to obtain representative samples. “Political polling has gotten less accurate as a result,” he wrote, “and it’s not going to be fixed in time for 2016.”

So, in these troubling times for polls, when it’s important to test new ways of measuring opinion against a clear standard, how did Pew and Gallup – the two most recognizable and arguably most prestigious names in the polling industry – react?

They gave up.

Yup! Dimock and Clifton lost their courage.

Perhaps they simply didn’t have confidence in their own people to compete against the other polls.

Perhaps they didn’t want to risk their institution’s reputation by being wrong.

Gallup claimed it wanted to “put our time and money and brainpower into understanding the issues and priorities” of the election.

Similarly, Pew argued that it would be “putting our energy where we feel we can do the most to further public understanding of the attitudes and dynamics shaping the 2016 election.”

My, my.

I worked at Gallup for thirteen years over three presidential elections, and we did pre-election polls and tried to understand the “issues and priorities” and the “attitudes and dynamics” of the election.

In fact, Gallup had been doing that for eighty years. Pew had done the same over the past three decades.

Yet, somehow, this year, Pew and Gallup lacked the courage to walk and chew gum at the same time.

Instead, they could continue to poll on the election and generate widespread media attention, but avoid measuring the horse race preferences among likely voters.

And, as reported in an earlier post on this site, that’s exactly what they did.

When Gallup first announced its timorous decision, Harry Enten wrote on fivethirtyeight, “Gallup Gave Up. Here’s Why That Sucks.”

“Gallup says it will still conduct issue polling, but here’s the problem: Elections are one of the few ways to judge a pollster’s accuracy. And that accuracy is important. We use polls for all kinds of things beyond elections….By foregoing horserace polls, Gallup has taken away a tool to judge its results publicly.”

His comments apply equally to Pew.

Zukin noted in his op ed that “The difficulty in doing [election polling] well has caused major players to not participate. That means there’s even less legitimacy because people who know how to do this right aren’t doing it.”

Hard to believe: Gallup and Pew, the industry giants, overwhelmed by a fear of failing.