Gothamist questioned the purpose of polling in response to ASRL's August 2011 bike lane expansion poll. David Moore responds... (Credit: Gothamist, screenshot)

Of all the reactions to this website’s realistic bike lane poll in New York City, the one that most caught my attention was from the New York City website Gothamist: “If most surveys…are going to produce ‘no opinion’ results, what’s the point? Should every poll be entitled ‘Americans Still Don’t Care, Need A Nap’?”

First – a short review. Last month, this site’s publisher, Art Science Research Lab, commissioned a poll using rigorous polling methods to determine how New Yorkers feel about bike lane expansion.

The poll we reported showed that, instead of the majority public support for expanding bicycle lanes in New York City reported by the Marist and Quinnipiac polls, in fact “Most New Yorkers Don’t Care About Bike Lane Expansion, If Given A Choice.”

That headline was followed by an analysis that showed a very divided public, with 23% supporting and 21% opposing the bike lane expansion, and a majority of residents – 56% – not caring one way or the other.

That’s what got Gothamist to wonder whether “we should just stop commissioning polls” altogether. Apparently it’s not very interesting to journalists to discover that a majority of the public is unengaged on an issue.

That response is exactly the reaction that, I believe, most pollsters fear. If polls depict the real public, rather than the mythical one that characterizes most news reporting, why should anyone pay much attention to what the public thinks?

For the real public typically includes a large proportion of the people – often a plurality and sometimes a majority – who are unengaged on any given policy issue.

On what basis do I make that claim?

In 2002, when I was senior editor of the Gallup Poll, my Gallup colleague, Jeff Jones, and I undertook a series of experiments designed to measure the unengagement factor among Americans nationally. We assumed that if people expressed an opinion about a policy, and then said they would not be upset if the opposite of what they had just expressed happened, the issue really didn’t matter to them.

Essentially, they would be okay with whatever decision the political leaders made (since they would not be “upset” either way). We characterized that position as having a “permissive” opinion – the people were essentially permitting political leaders to make the decision, with no hard feelings whatever the outcome.

On about 20 policy issues, the pattern was consistent – from one-third to two-thirds of the public said they would not be upset whether the policy was adopted or not. For more than 80% of the issues we tested, more people expressed a “permissive” opinion than an opinion either in favor or opposed. (That same pattern prevailed on the bike poll for this website, mentioned earlier.)

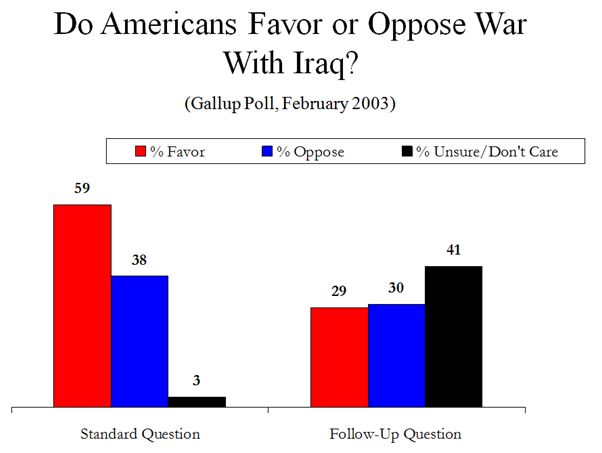

Perhaps the most stunning result of the Gallup experiments was on the issue of going to war with Iraq in February 2003, just a month before the actual invasion. At the time, all the polls, using the standard forced-choice questions, were showing strong public support for going to war. Media coverage was intense, and – as later admitted by the New York Times and Washington Post – the coverage was highly biased in favor of the war.[1]

Later, the high level of public support led some pundits to blame the American people, as much as the Bush administration, for invading Iraq. Ironically, Foreign Policy’s Daniel W. Drezner cites Gallup polls to support his vision of “the American mass public as unindicted co-conspirator.”

But what did the Gallup Poll really show?

Despite the widespread pro-war media coverage, just a month before the United States launched the invasion of Iraq, Gallup found a public that was about evenly split – 29% in favor of going to war, and 30% opposed. A plurality – 41% – expressed a permissive opinion (saying they would not be upset either if the U.S. went to war, or if it did not go to war).


Some clarification here:

The results from the standard question reported in the chart are based on the typical forced-choice version: “Would you favor or oppose sending American ground troops to the Persian Gulf in an attempt to remove Saddam Hussein from power in Iraq?”

Note there is no explicit option, “or don’t you have an opinion?” Nor is there any attempt to measure how strongly people feel about the issue. The result: 59% favored and 38% opposed the war, with just 3% holding no opinion.

The follow-up question worked as follows. People who favored the war were asked how upset they would be if the U.S. did not go to war: very, somewhat, not too, or not at all upset.

People who opposed the war were asked how upset they would be if the U.S. did go to war. (For both questions, people who said “not too” or “not at all upset” were classified as not upset.)
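The classification rule described above can be sketched in a few lines of Python. This is only an illustration, not Gallup’s actual processing code: the function name `classify` and the answer labels are hypothetical, and because the article does not say how a missing follow-up answer was handled, the sketch simply rejects one rather than guess.

```python
from typing import Optional

# Follow-up answers, grouped as the article describes:
# "very" or "somewhat" upset counts as upset;
# "not too" or "not at all" upset counts as not upset.
UPSET = {"very", "somewhat"}
NOT_UPSET = {"not too", "not at all"}


def classify(initial: Optional[str], follow_up: Optional[str] = None) -> str:
    """Classify one respondent as 'favor', 'oppose', or 'permissive'.

    initial   -- answer to the forced-choice question: 'favor', 'oppose',
                 or None for no opinion.
    follow_up -- how upset the respondent would be if the opposite outcome
                 occurred (asked only of those who favored or opposed).
    """
    if initial is None:
        # The article groups "no opinion" with the permissive respondents.
        return "permissive"
    if initial not in ("favor", "oppose"):
        raise ValueError(f"unexpected initial answer: {initial!r}")
    if follow_up in UPSET:
        return initial  # a firmly held view
    if follow_up in NOT_UPSET:
        return "permissive"  # would accept either outcome
    # Assumption: handling of missing follow-up answers is not described,
    # so this sketch refuses to guess.
    raise ValueError(f"unexpected follow-up answer: {follow_up!r}")


if __name__ == "__main__":
    print(classify("favor", "not too"))  # permissive
```

A respondent who favored the war but would be only “not too” upset if it did not happen lands in the permissive group, exactly as the follow-up question intends.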

The follow-up question revealed that 30% of respondents favored the war, but would not have been upset if the U.S. did not go to war. Another 8% of respondents said they opposed the war but would not be upset if the U.S. did go to war.

Combined with the 3% who initially expressed no opinion, that meant 41% of the public either said they had no opinion or said they would not be upset whether the U.S. did, or did not, go to war.

The overall result: 29% of Americans supported the initiation of war, 30% opposed it, and 41% were willing to leave the decision to political leaders.
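The arithmetic behind these three figures can be checked with a short Python sketch. The function name `recompute_shares` is hypothetical; the inputs are the percentages reported above, each expressed as a share of all respondents.

```python
def recompute_shares(favor, oppose, no_opinion, favor_not_upset, oppose_not_upset):
    """Split forced-choice results into firm support, firm opposition,
    and a combined permissive/no-opinion group.

    All arguments are percentages of the full sample.
    """
    firm_favor = favor - favor_not_upset      # favored and would be upset
    firm_oppose = oppose - oppose_not_upset   # opposed and would be upset
    permissive = no_opinion + favor_not_upset + oppose_not_upset
    return firm_favor, firm_oppose, permissive


# Figures reported for Gallup's February 2003 Iraq question
shares = recompute_shares(favor=59, oppose=38, no_opinion=3,
                          favor_not_upset=30, oppose_not_upset=8)
print(shares)  # (29, 30, 41)
assert sum(shares) == 100  # the three groups still cover everyone
```

Because published poll figures are rounded, a recount from the raw responses could differ by a point in any category.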

These results clearly contradict the vision of the public as an “unindicted co-conspirator.” The public was not “clamoring” for war, though it was willing to let the government start one if political leaders so decided.

The faulty vision of the public as clamoring for war rests on a misreading of public opinion, one that does not distinguish the superficial, top-of-mind response from the firmly held view. The initial question at best measures the whim of the public; the follow-up question helps to get closer to what the framers meant when they referred to the will of the public.

So, what should pollsters do? Are polls that give a realistic view of the public boring, as Gothamist implies? I suspect many journalists would think so. And many pollsters think that many journalists would think so. Thus, pollsters produce – and reporters embrace – the superficial measures that portray the public as essentially fully engaged, informed, and opinionated. It’s so much more interesting!

But, unfortunately, that vision is at best faulty and misleading. And at worst, it allows pundits and politicians to shift responsibility for bad decisions from political leaders to the public.

Compared with most current public opinion polls that rely on a mythical public, realistic polls may not be as exciting, but a vibrant democracy can hardly afford anything less.

[1] Michael Massing, Now They Tell Us: The American Press and Iraq (New York Review of Books, 2004); also W. Lance Bennett, Regina G. Lawrence, and Steven Livingston, When the Press Fails: Political Power and the News Media from Iraq to Katrina (University of Chicago Press, 2007).

David W. Moore is a Senior Fellow with the Carsey Institute at the University of New Hampshire. He is a former Vice President of the Gallup Organization and was a senior editor with the Gallup Poll for thirteen years. He is author of The Opinion Makers: An Insider Exposes the Truth Behind the Polls (Beacon, 2008; trade paperback edition, 2009). Publishers Weekly refers to it as a “succinct and damning critique…Keen and witty throughout