(Credit: http://nyphotographic.com/)

In the days following the election, many pundits argued “the polls were wrong again and much of America wants to know why.” (If you search for “why the polls were wrong in 2020”, you’ll find scores of articles with similar points of view.)

But Nate Silver of FiveThirtyEight – not a pollster himself as he is quick to note, but someone who uses polls to project election results – has a different view. A week after the election, he wrote:

“I don’t entirely understand the polls-were-wrong storyline. This year was definitely a little weird, given that the vote share margins were often fairly far off from the polls (including in some high-profile examples such as Wisconsin and Florida).

“… the margins by which the polls missed — underestimating President Trump by what will likely end up being 3 to 4 percentage points in national and swing state polls — is actually pretty normal by historical standards.”

Then Reid Wilson of The Hill chimes in with his recent piece, “Why the polls weren’t as wrong as you think.” He argues that the national polls were off by only about four points, and that

“Pollsters came close to nailing Biden’s share of the vote in battleground states like Pennsylvania, Michigan, Arizona and Georgia. Those pollsters only overstated Biden’s vote share in Wisconsin and Florida, and even then by only 2 points and 1 point, respectively.”

Here’s the problem: “The polls” referred to by Nate Silver and Reid Wilson are mostly polls that get relatively little attention.

The polls that actually shape public opinion about the election, and no doubt the expectations of pundits and political leaders as well, are primarily the major media polls.

I offer this assertion as self-evident, based on the fact that the major media polls – those sponsored by the five major networks (ABC, CBS, CNN, Fox, and NBC) and the three arguably most influential newspapers in the country (New York Times, Wall Street Journal, and Washington Post) – all have an immediate and immense audience for their results.

The major media pollsters/partners referred to in this article are ABC/WP, CBS, CNN, Fox, NBC/WSJ, and NYT/Siena. (NBC partnered with the Wall Street Journal for national polls and with the Marist Poll when measuring state contests.)

According to Nate Silver’s FiveThirtyEight, all six pollsters receive high ratings.

When these pollsters speak, the public listens.

Of course, there are other polls covered in the media. But those other pollsters don’t have the guaranteed wide audience that the major media pollsters have.

Yet, while these pollsters all reported their results throughout the election campaign, and generally gave highly positive pictures of Biden’s chances of winning the presidency, they were largely absent from the calculation of the final predictions of “the polls.”

The reason some of the major media polls were absent is that often their latest polls were not considered close enough to Election Day to be counted by Real Clear Politics. Even if they were not counted, however, they still exerted a major influence on the public’s perception of the election outcome. That’s why I have included them in my assessment of how accurately they informed the public about the state of the election.

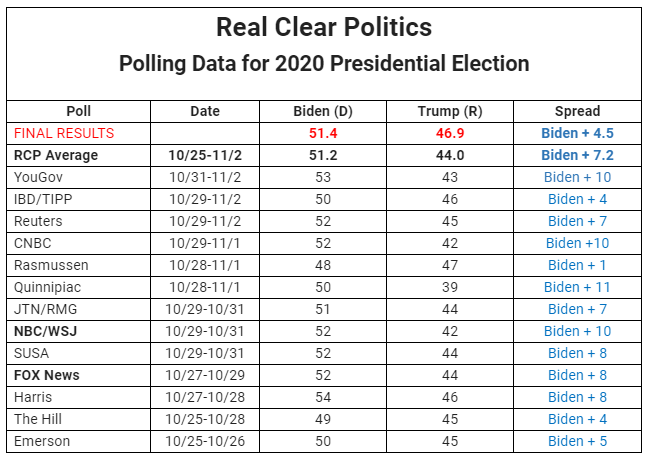

Below is a modified chart from Real Clear Politics that shows the final polls before the election. Only polls conducted close to the election (in this case, those that began on Oct. 25 or later) are included.

Of the six major media polls, only Fox and NBC/WSJ made the cut-off time and thus contributed to the Real Clear Politics average. And both of those results were larger than the average of the rest of the polls – Fox by about a point, and NBC/WSJ by about three points.

The rest of the major media polls also produced results considerably above the Real Clear Politics average.

Below is a chart that compares the latest results of the major media polls before the election with the Real Clear Politics poll average, and with the actual vote. Dates of the polls are noted underneath the name of the pollster.

CNN just missed the cut-off: its latest poll began on Oct. 23 and ran through Oct. 25, showing Biden ahead by 12 points. Though it wasn’t counted by Real Clear Politics, it clearly was part of the ongoing narrative about Biden’s strong lead.

Note that the CBS poll was conducted in early September, but produced the same results as the NBC/WSJ poll just before the election.

In fact, the Biden lead seemed fairly steady over the whole campaign, so it didn’t matter too much whether the polls that “counted” in the RCP average were conducted close to the election or much earlier. The variation reflected mostly differences among pollsters rather than changes over time.

Given these results, it’s easy to understand why so many people might have expected a blow-out win by Biden. All of the major media polls showed a substantially larger margin over Trump than the actual vote. And all these polls also showed Biden’s lead to be larger than what “the polls” in the Real Clear Politics average showed.

The average margin of these major media polls is 10.2 points, an error of 5.7 percentage points. Even if the CBS poll is discounted because it was conducted in early September, Biden’s average lead and the average error remain the same.
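For readers who want to check the arithmetic, here is a minimal sketch of how a polling error on the margin can be computed. The function name `average_margin_error` is hypothetical, and the actual margin of 4.5 points is not stated directly above; it is an assumption implied by the two figures in the text (10.2 minus 5.7). The only individual poll margin used is CNN’s 12-point lead, which is mentioned earlier.

```python
from statistics import mean

def average_margin_error(poll_margins, actual_margin):
    """Return (average poll margin, error vs. the actual margin), in points.

    A positive error means the polls overstated the leader's margin.
    """
    avg = mean(poll_margins)
    return round(avg, 1), round(avg - actual_margin, 1)

# Assumption: actual national margin implied by the article's own figures
# (average poll margin of 10.2 minus stated error of 5.7).
ACTUAL_MARGIN = 4.5

# The article's reported average for the major media polls.
print(average_margin_error([10.2], ACTUAL_MARGIN))

# CNN's final poll alone, which showed Biden ahead by 12 points.
print(average_margin_error([12], ACTUAL_MARGIN))
```

Passing the full list of individual poll margins would reproduce the average as well; those values are in the chart rather than the text, so they are not repeated here.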

While some of these major media polls were not included in the Real Clear Politics average of “the polls,” they still would have exerted a profound influence during the campaign on how the public viewed the election.

As David Leonhardt of the New York Times wrote, “Dozens of pre-election polls suggested that Joe Biden would beat President Trump by a wide margin, but the race instead came down to one or two percentage points in a handful of states.”

All the major media polls contributed to that false expectation that Biden would win by a wide margin.

Battleground States

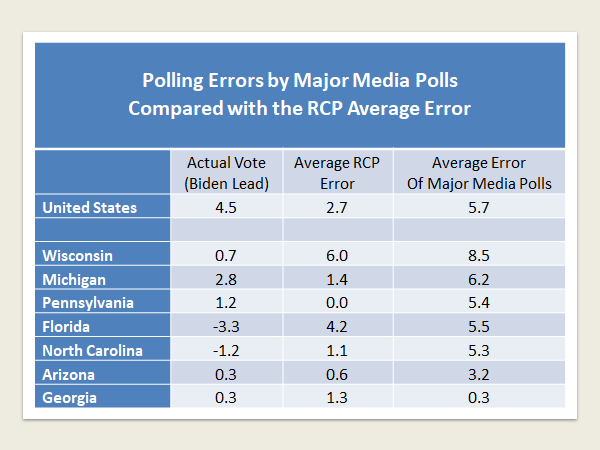

In the key battleground states, there is a similar pattern: the errors of the major media polls are consistently larger than the average RCP error. The table below compares the polling errors of the major media polls with the average RCP error for the seven major battleground states.

For each state, and for the United States, I included only the major media polls that were conducted in the final month of the campaign. Again, not all of these polls met the cut-off date to be included in the Real Clear Politics final average, but their results were part of the national dialogue on how well the candidates were faring. And they consistently overestimated Biden’s lead in most of the key battleground states.

As this table makes clear, except for Georgia – where only CBS and the New York Times/Siena measured voter preferences – the major media polls produced errors that were considerably higher than the average Real Clear Politics error for “the polls.”

The Real Clear Politics average error suggests problems only in Wisconsin and Florida, as Silver and Wilson have noted. But the average errors of the major media polls show a much more significant problem — with an unusually large error nationally, and large errors also in the battleground states of Wisconsin, Michigan, Pennsylvania, Florida, and North Carolina.

We should not be misled by bromides such as “The Polls Weren’t Great. But That’s Pretty Normal.” or “The polls weren’t as wrong as you think.” They were even worse.

We do need answers about how the polls failed us, but mostly from the major media pollsters.